VLUE

VLUE: A Multi-Task Multi-Dimension Benchmark for Evaluating Vision-Language Pre-training

Wangchunshu Zhou, Yan Zeng, Shizhe Diao, Xinsong Zhang

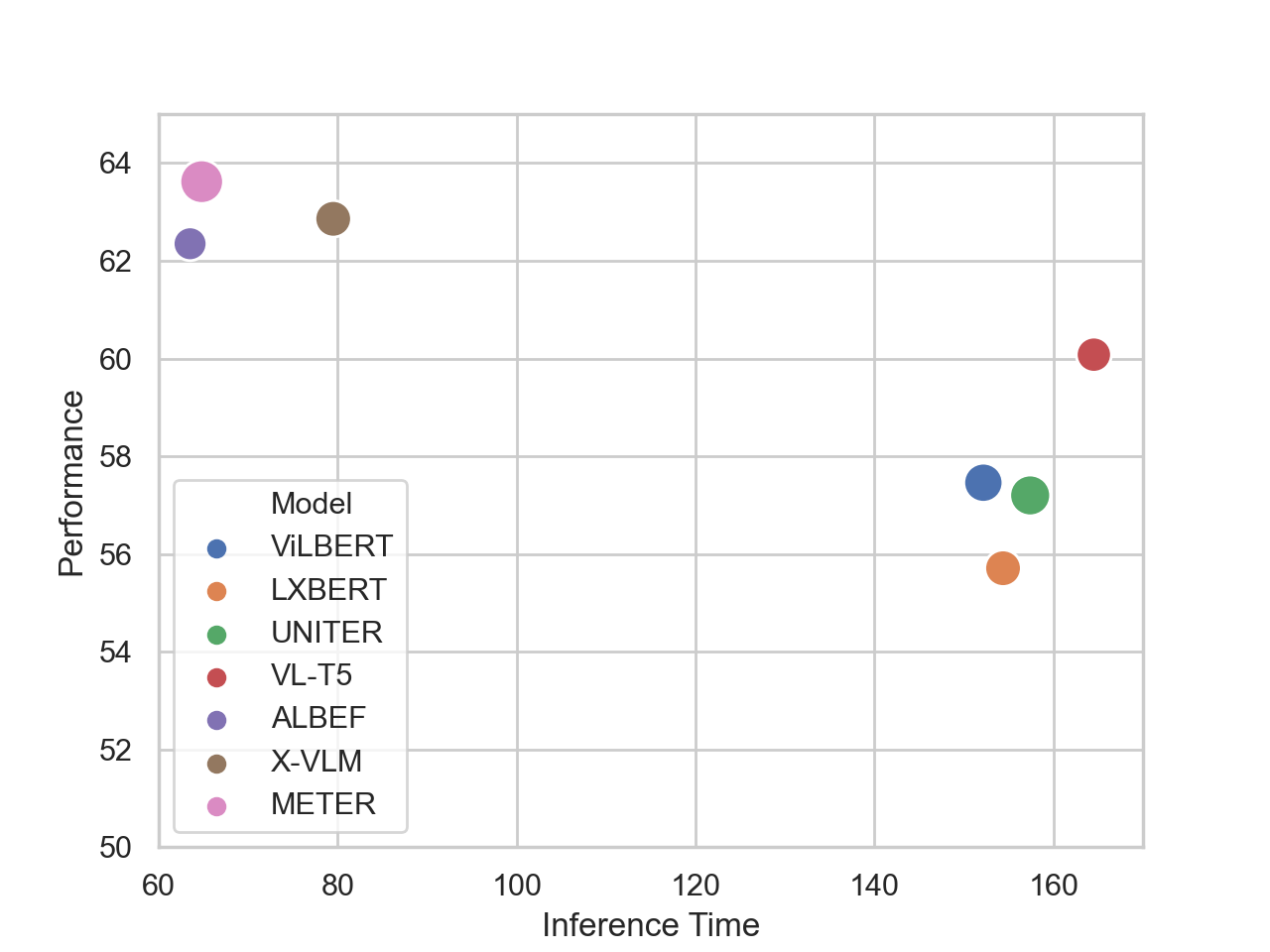

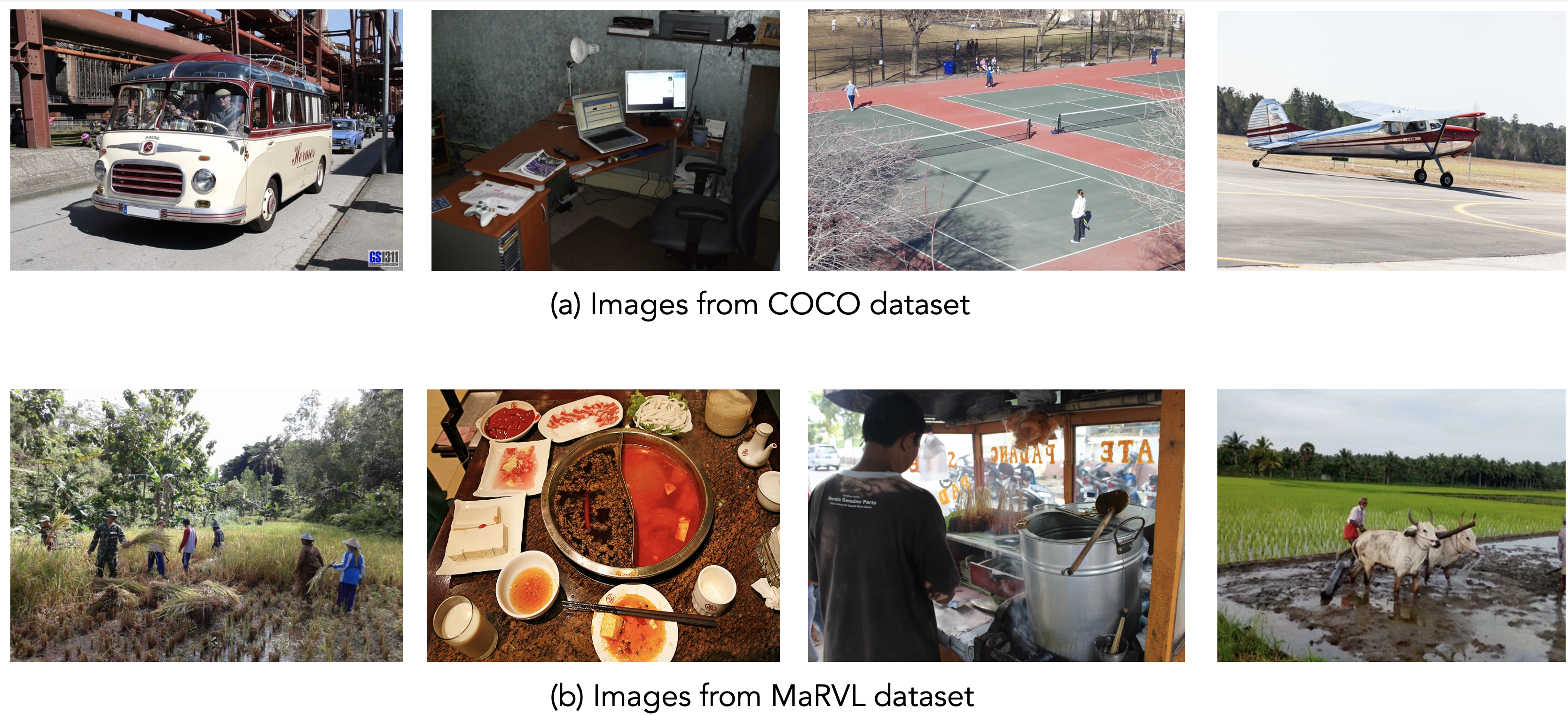

Recent advances in vision-language pre-training (VLP) have demonstrated impressive performance on a range of vision-language (VL) tasks. However, several challenges remain in measuring the community’s progress toward general multi-modal intelligence. First, most downstream VL datasets are annotated using raw images that have already been seen during pre-training, which may lead to an overestimation of current VLP models’ generalization ability. Second, recent VLP work mainly focuses on absolute performance and overlooks the efficiency-performance trade-off, which is also an important indicator of progress. To this end, we introduce the Vision-Language Understanding Evaluation (VLUE) benchmark, a multi-task multi-dimension benchmark for evaluating the generalization capabilities and the efficiency-performance trade-off (“Pareto SOTA”) of VLP models. We demonstrate that all VLP models show a sizable generalization gap when tested on out-of-distribution test sets annotated on images from a more diverse distribution that spreads across cultures. Moreover, we find that measuring the efficiency-performance trade-off of VLP models yields complementary insights into several VLP design choices. We release the VLUE benchmark to promote research on building vision-language models that generalize well to images unseen during pre-training and are practical in terms of the efficiency-performance trade-off.
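To make the “Pareto SOTA” idea concrete, here is a minimal sketch of how a set of Pareto-optimal models could be picked out from efficiency and performance measurements. It is not the benchmark's official evaluation code: the `ModelResult` type, the `pareto_front` helper, the choice of latency as the efficiency measure, and all model names and numbers are hypothetical and used for illustration only.

```python
from typing import List, NamedTuple


class ModelResult(NamedTuple):
    name: str
    latency_ms: float  # hypothetical efficiency measure; lower is better
    score: float       # hypothetical task score; higher is better


def pareto_front(results: List[ModelResult]) -> List[ModelResult]:
    """Return the results that no other result dominates.

    A result `o` dominates `r` if `o` is at least as good on both axes
    and strictly better on at least one of them.
    """
    front = []
    for r in results:
        dominated = any(
            o.latency_ms <= r.latency_ms
            and o.score >= r.score
            and (o.latency_ms < r.latency_ms or o.score > r.score)
            for o in results
        )
        if not dominated:
            front.append(r)
    # Sort by latency so the front reads from fastest to slowest.
    return sorted(front, key=lambda r: r.latency_ms)


# Made-up numbers for illustration only; these are not results from the paper.
results = [
    ModelResult("model_a", 10.0, 70.0),
    ModelResult("model_b", 20.0, 75.0),
    ModelResult("model_c", 25.0, 74.0),  # slower and worse than model_b
    ModelResult("model_d", 40.0, 80.0),
]

front_names = [r.name for r in pareto_front(results)]
print(front_names)
```

Under this reading, a model counts as “Pareto SOTA” when no other model is both at least as efficient and at least as accurate. In the toy data, `model_c` drops out because `model_b` is faster and scores higher.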

Links:

[Paper]

[Leaderboard]

[Data]

[Github]

@article{zhou2022vlue,
  author = {Wangchunshu Zhou and Yan Zeng and Shizhe Diao and Xinsong Zhang},
  title = {VLUE: A Multi-Task Benchmark for Evaluating Vision-Language Models},
  journal = {CoRR},
  volume = {abs/2205.15237},
  year = {2022},
  archivePrefix = {arXiv},
  eprint = {2205.15237}
}